July 23rd, 2024

How to Use Python for Statistical Analysis

By Zach Fickenworth · 7 min read

Python has become one of the most valuable tools in data science for a reason: it’s an extremely versatile programming language. Flexibility is its biggest plus point – you can create almost any app or piece of software you could think of for data manipulation.

You may already know this, but 44.6% of programmers use Python worldwide, so it’s not an underappreciated language. But you may not understand how to use the language for statistical analysis.

Why Use Python for Statistical Analysis?

You’ve already discovered one of the reasons – Python is extremely versatile. That makes it an excellent choice for creating models you can use for analyzing data and conducting statistical analysis.

Much of that versatility comes from Python’s open-source nature. Multitudes of programmers have already created Python libraries, which are usually freely available, that you can download and use for your own purposes. Contrast that with a closed, proprietary platform. There, you’re largely limited to the libraries and functionality the vendor makes available, with far less room for creating exactly what you need if it isn’t already provided.

The community that’s built around Python also goes a step further. Not only does it create the libraries you need for data analysis, but it’s also available to help you along the way. If you have a question, somebody has likely already asked it (and received an answer). Add the language’s readability, which strips away a lot of complexity, and statistical analysis becomes easier to complete in Python than in many other languages.

How You Can Use Python for Statistical Analysis

So, you understand how Python statistics libraries – coupled with the language’s ease of use – make it a good choice to analyze data. Now you need to know the “how.” What statistical modeling techniques can you execute using Python?

Descriptive Statistics

When you just need to summarize the most basic features in your dataset, you need something to calculate descriptive statistics. Mean, mode, and ranges all fall under this umbrella, and there’s a Python statistics library for that job: Pandas.

Using Pandas’ toolkit, you can calculate the minimum and maximum values of a dataset with the .min() and .max() methods, work out its mean with .mean(), and count the number of data points you have with .count().
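As a minimal sketch of what that looks like in practice, the snippet below builds a small DataFrame and calls those methods. The data and the column name "score" are made up for illustration only.

```python
import pandas as pd

# Hypothetical sample data; the column name "score" is an assumption.
df = pd.DataFrame({"score": [4, 8, 15, 16, 23, 42]})

lowest = df["score"].min()       # smallest value
highest = df["score"].max()      # largest value
average = df["score"].mean()     # arithmetic mean
n_points = df["score"].count()   # number of non-missing data points
value_range = highest - lowest   # range = max minus min

print(lowest, highest, average, n_points, value_range)

# describe() bundles several of these summaries into one table.
print(df["score"].describe())
```

If you want everything at once, describe() is usually the quickest starting point, while the individual methods are handy when you only need one number.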

Inferential Statistics

Use Python’s scipy.stats module and you get access to ANOVA tests, t-tests, chi-square tests, and more, all useful in helping you draw conclusions about a population from data samples. Throw the statsmodels library into the mix and you’ll be able to perform regression analysis to model the relationship between a dependent variable and the independent variables that may affect it.
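Here is a rough sketch of those three scipy.stats tests side by side. The group measurements and the contingency table are invented for illustration, so treat the results as a demonstration of the calls rather than a real finding.

```python
from scipy import stats

# Hypothetical measurements for three groups (illustrative values only).
group_a = [5.1, 4.9, 5.6, 5.8, 6.0]
group_b = [6.2, 6.8, 7.1, 6.5, 7.0]
group_c = [5.0, 5.2, 5.1, 4.8, 5.3]

# Independent two-sample t-test: do groups A and B share a mean?
t_stat, t_p = stats.ttest_ind(group_a, group_b)

# One-way ANOVA: do all three groups share a mean?
f_stat, f_p = stats.f_oneway(group_a, group_b, group_c)

# Chi-square test of independence on a hypothetical 2x2 table of counts.
observed = [[30, 10], [20, 40]]
chi2, chi_p, dof, expected = stats.chi2_contingency(observed)

print(f"t-test p-value: {t_p:.4f}")
print(f"ANOVA p-value: {f_p:.4f}")
print(f"chi-square p-value: {chi_p:.4f} (dof={dof})")
```

Each call returns a test statistic and a p-value, which you then compare against the significance level you chose before running the test.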

Multivariate Statistics

Interactions between variables and the dependencies that form between them – those are the two things you explore via multivariate statistics. And there’s a Python library for that, too: scikit-learn, which you import as sklearn.

Assuming you have at least three variables, you can use the sklearn library to run cluster and factor analyses, as well as principal component and discriminant analysis.
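To make that concrete, here is a small sketch that runs principal component analysis and k-means clustering on synthetic data. The two groups of points are generated at random purely for illustration, and the choice of two components and two clusters is an assumption that fits this toy dataset.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

# Synthetic data: two well-separated groups, each with three variables.
rng = np.random.default_rng(0)
group_1 = rng.normal(loc=0.0, scale=0.5, size=(20, 3))
group_2 = rng.normal(loc=5.0, scale=0.5, size=(20, 3))
X = np.vstack([group_1, group_2])

# Principal component analysis: project three variables onto two components.
pca = PCA(n_components=2)
X_reduced = pca.fit_transform(X)
print("Explained variance ratio:", pca.explained_variance_ratio_)

# Cluster analysis: ask k-means to find two clusters.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = kmeans.fit_predict(X)
print("Cluster labels:", labels)
```

Factor and discriminant analysis follow the same fit-and-transform pattern through sklearn's FactorAnalysis and LinearDiscriminantAnalysis classes.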

Hypothesis Testing

When testing hypotheses, you’ll have a null hypothesis at the bare minimum. This is the default assumption, usually that there is no effect or no difference. You’ll also have an alternative hypothesis, which, as the name suggests, is the competing claim you want to find evidence for.

Python can help you weigh one against the other. The scipy and statsmodels libraries allow you to stack your two hypotheses against each other to answer a simple question: Does the data give me enough evidence to reject the null hypothesis in favor of the alternative?
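A one-sample t-test is one simple way to sketch this. In the example below, the sample values, the null-hypothesis mean of 50, and the 0.05 significance level are all assumptions chosen for illustration.

```python
from scipy import stats

# Hypothetical measurements (illustrative values only).
sample = [52.1, 53.4, 51.8, 54.0, 52.7, 53.1, 52.5, 53.8]

# Null hypothesis: the population mean is 50.
# Alternative hypothesis: the population mean is not 50.
null_mean = 50.0
alpha = 0.05  # significance level, chosen before running the test

result = stats.ttest_1samp(sample, popmean=null_mean)
reject_null = bool(result.pvalue < alpha)

print(f"t = {result.statistic:.2f}, p = {result.pvalue:.6f}")
print("Reject the null hypothesis" if reject_null else "Fail to reject the null hypothesis")
```

Note the wording of the outcome: a test either rejects the null hypothesis or fails to reject it. It never proves the alternative outright.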

Correlation

After computing your descriptive statistics and testing your hypotheses, you’ll often move on to establishing correlation. This is the measure of the direction and strength of a relationship between two variables in your dataset. The pandas and scipy libraries allow you to do this, and a pandas correlation matrix can be rendered as a heatmap, which is ideal for data visualization.
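The sketch below computes a correlation two ways: as a full matrix with pandas and as a single coefficient with a p-value from scipy. The columns "hours_studied" and "exam_score" and their values are hypothetical.

```python
import pandas as pd
from scipy import stats

# Hypothetical dataset; column names and values are for illustration only.
df = pd.DataFrame({
    "hours_studied": [1, 2, 3, 4, 5, 6],
    "exam_score": [52, 55, 61, 64, 70, 75],
})

# pandas: pairwise correlation matrix (Pearson by default).
corr_matrix = df.corr()
print(corr_matrix)

# scipy: Pearson's r for one pair of variables, plus a p-value.
r, p_value = stats.pearsonr(df["hours_studied"], df["exam_score"])
print(f"r = {r:.3f}, p = {p_value:.4f}")
```

The sign of r gives you the direction of the relationship and its magnitude gives you the strength, with values near 1 or -1 indicating a strong linear relationship.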

Regression

If you have a dependent variable and at least one independent variable, you’ll also have a question to answer: How much does the dependent variable change based on changes in the independent variable?

From there, you can determine if you can predict changes in a dependent variable based on changes in an independent one, establishing a dependency in the process. This is called regression, and there are several types – linear, logistic, and polynomial being at the forefront.

Python comes through again. With the seaborn, sklearn, or scipy libraries, you can evaluate relationships – should any exist – between these types of variables.
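As a minimal sketch of simple linear regression, the example below fits the same made-up data with scipy and with sklearn. The x and y values are invented for illustration, and predicting at x = 6 is just a usage example.

```python
import numpy as np
from scipy import stats
from sklearn.linear_model import LinearRegression

# Hypothetical data: x is the independent variable, y the dependent one.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([3.1, 4.9, 7.2, 9.0, 10.8])

# scipy: slope, intercept, and r-value in one call.
fit = stats.linregress(x, y)
print(f"slope = {fit.slope:.2f}, intercept = {fit.intercept:.2f}, r = {fit.rvalue:.3f}")

# sklearn: same model, with a predict() method for new values.
# sklearn expects a 2D array of features, hence the reshape.
model = LinearRegression().fit(x.reshape(-1, 1), y)
predicted = model.predict(np.array([[6.0]]))
print(f"Predicted y at x = 6: {predicted[0]:.2f}")
```

The slope answers the question posed above: it estimates how much the dependent variable changes for each one-unit change in the independent variable.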

Visualization

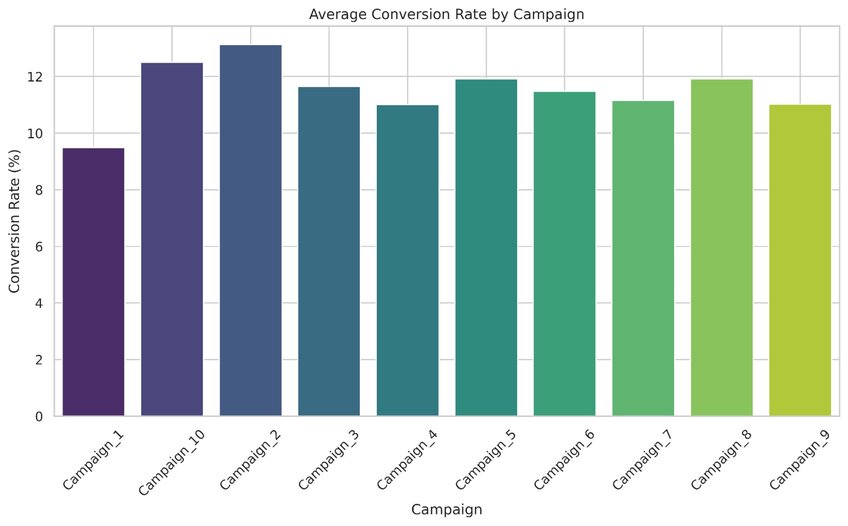

We’ve already touched on data visualization in Python – the pandas library allows you to create heatmaps, for instance. Many other libraries exist that allow you to visualize your data using graphical forms, such as charts and tables.

Why does that matter? Communication of the data you’ve derived is key. Visualization allows you to more easily see – and demonstrate – the results you’ve achieved.

Data visualization example that shows the average conversion rate by campaign. Created in seconds with Julius AI

Pros and Cons of Using Python

While Python is one of the stronger performers in scientific computing, it’s not a perfect language – there are both pros and cons to consider before engaging in Python statistical analysis.

Pros

Python’s beginner-friendliness is one of its biggest advantages. Even if you’re an inexperienced coder – or an expert in a different language – Python is written in a way that’s easy to understand. Then, there’s its open-source nature – there are dozens of Python libraries dedicated to data analysis, along with a strong community to help you on your way.

Cons

With an open-source platform comes some security concerns – less reputable “libraries” may actually be used to inject malicious code into an application’s backend. You’re less likely to face these concerns with a closed-source language because only the company operating that language can make changes to it.

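There’s also a speed issue. Pure Python code is slower to execute than code written in compiled languages, such as C or Fortran, which can add time to the statistical analysis you conduct. Libraries like NumPy soften the blow by handing the heavy number crunching to compiled code, but loops written in plain Python will still be comparatively slow.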

Use No-Code Julius AI Instead of Python to Make Statistical Analysis Easier

As powerful as Python can be as a statistical analysis tool, there’s one inescapable issue you face when using it: You need to know how to code.

That’s a major issue if you’re not a computer science dab hand, and it’s a problem that’s going to make Python impossible to use if you don’t want to dedicate hours – even weeks – to understanding a new language.

You need an alternative. Enter Julius – a computational AI that’s capable of analyzing your data and providing expert insights in seconds. Not only is it a no-code solution, but it’s also much faster than Python while still being capable of providing visualizations and valuable insights.

Sign up to Julius AI today – a world of codeless data analysis awaits.

Frequently Asked Questions (FAQs)

What are the Python libraries for statistical analysis?

Python offers several libraries for statistical analysis, including Pandas for descriptive statistics, SciPy for hypothesis testing, and Statsmodels for advanced statistical modeling like regression. Additionally, Scikit-learn (Sklearn) is widely used for multivariate analysis, and Seaborn provides excellent visualization tools to complement your analysis.

How to calculate statistics in Python?

To calculate statistics in Python, you can use libraries like Pandas for mean, median, and standard deviation, or SciPy for advanced computations like t-tests or ANOVA. Import the library, load your dataset, and call the relevant functions (e.g., data.mean() for mean in Pandas or scipy.stats.ttest_ind() for a t-test).

What is the difference between NumPy and Pandas?

NumPy focuses on efficient numerical computations with multi-dimensional arrays, making it ideal for mathematical operations. Pandas, built on top of NumPy, offers more flexibility for data manipulation and analysis, especially with tabular datasets, providing tools like DataFrames and built-in support for handling missing data.
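A quick sketch of the difference, using made-up values: NumPy handles the raw array math, while pandas adds labels and skips missing values by default. The column names "city" and "temp_c" are assumptions for illustration.

```python
import numpy as np
import pandas as pd

# NumPy: fast element-wise math on a multi-dimensional array.
arr = np.array([[1.0, 2.0], [3.0, 4.0]])
doubled = arr * 2               # multiplies every element
col_means = arr.mean(axis=0)    # mean of each column

# A plain NumPy mean propagates missing values (NaN).
temps = np.array([4.0, np.nan, 31.0])
numpy_mean = temps.mean()       # result is NaN

# pandas: labeled, tabular data with built-in missing-data handling.
df = pd.DataFrame({
    "city": ["Oslo", "Lima", "Pune"],
    "temp_c": [4.0, np.nan, 31.0],
})
pandas_mean = df["temp_c"].mean()        # skips NaN by default
n_missing = df["temp_c"].isna().sum()    # counts missing entries

print(doubled, col_means, numpy_mean, pandas_mean, n_missing)
```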

What is data wrangling in Python?

Data wrangling in Python refers to the process of cleaning, transforming, and organizing raw data into a usable format for analysis. Libraries like Pandas and NumPy are commonly used for tasks such as handling missing values, reformatting data types, and reshaping datasets to prepare them for deeper statistical analysis.
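Here is a small sketch of those three tasks on a made-up table. The column names are hypothetical, and filling missing sales with 0 is an assumption for this example; in real data you would pick a strategy that fits your situation.

```python
import pandas as pd

# Hypothetical raw data: numbers stored as text, with some values missing.
raw = pd.DataFrame({
    "id": ["1", "2", "3"],
    "q1_sales": ["100", None, "250"],
    "q2_sales": ["120", "90", None],
})

clean = raw.copy()

# Reformat data types: text to integers and numbers.
clean["id"] = clean["id"].astype(int)
for col in ["q1_sales", "q2_sales"]:
    # Handle missing values: here we assume a missing entry means zero sales.
    clean[col] = pd.to_numeric(clean[col]).fillna(0)

# Reshape: one row per (id, quarter) pair instead of one column per quarter.
tidy = clean.melt(id_vars="id", var_name="quarter", value_name="sales")
print(tidy)
```

The reshaped table has one observation per row, which is the layout most statistical functions and plotting libraries expect.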