March 3rd, 2024

6 Types of Statistical Analysis (and How You Can Do Each Quickly)

By Connor Martin · 10 min read

Raw data is nothing but an unorganized collection of figures and measurements that lack context, structure, and interpretive value. In a word, it’s chaos. But use statistical analysis, and this chaos transforms into clarity, making data comprehensible and actionable in the process.

Now, there’s more than one way to get from Point A (chaos) to Point B (clarity). The route you choose depends on the specific goals of your analysis, the nature of your data, and the insights you aim to extract.

With this in mind, let’s look at the six types of statistical analysis and how you can perform each quickly.

What Are the Types of Statistical Analysis?

Two types of statistical analysis are considered primary: descriptive analysis and inferential analysis. However, four more types are widely used across various fields. Together, the six types of statistical analysis are:

1. Descriptive analysis

2. Inferential analysis

3. Predictive analysis

4. Prescriptive analysis

5. Exploratory data analysis

6. Causal analysis

So, let’s explore each of these six methods in more detail.

Descriptive Analysis

In statistical analysis, it doesn’t get simpler than descriptive analysis. This type of analysis serves only to organize and summarize data, using summary measures such as the mean, median, and standard deviation alongside visual tools like charts, graphs, and tables. It’s all about describing the data you have in hand, not drawing conclusions from it.

However, once a previously complex and unruly data set has been condensed into a more digestible form using descriptive statistics, it becomes much easier to apply more advanced statistical tools and arrive at the desired conclusions.

Descriptive analysis example of a plot visualizing the closing prices along with the 50-day and 200-day moving averages for Apple Inc. Created in seconds with Julius AI
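To make the idea concrete, here is a minimal sketch of descriptive analysis using only Python's standard library. The closing prices are made-up illustrative numbers (not real Apple Inc. data), and the helper names `describe` and `moving_average` are hypothetical. A 3-day window stands in for the 50-day and 200-day windows mentioned above, simply to keep the example short.

```python
import statistics

# Hypothetical daily closing prices (illustrative values, not real market data).
closing_prices = [10.0, 12.0, 11.0, 13.0, 14.0, 12.0, 15.0]


def describe(values):
    """Summarize a list of numbers with common descriptive statistics."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


def moving_average(values, window):
    """Average each run of `window` consecutive values."""
    return [
        statistics.mean(values[i - window + 1 : i + 1])
        for i in range(window - 1, len(values))
    ]


summary = describe(closing_prices)
ma3 = moving_average(closing_prices, window=3)

for name, value in summary.items():
    print(f"{name:>6}: {value:.2f}")
print("3-day moving average:", [round(v, 2) for v in ma3])
```

Nothing here draws a conclusion about future prices. The code only condenses the numbers you already have, which is exactly the scope of descriptive analysis.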

Inferential Analysis

If conclusions are what you’re after, inferential statistics is the way to go. Using this statistical method, you can make inferences about a larger population based on a representative sample of that population. Of course, “representative” is the key word here, as the quality of the inference directly depends on how well the sample represents the entire population.

Still, there’s always room for error, so every generalization made using inferential statistics also accounts for a margin of error. This means that while inferential analysis can provide valuable insights and predictions, there’s always a degree of uncertainty involved.

That said, a generalization made from the sample can be checked with a statistical test (e.g., a statistical hypothesis test), which indicates whether the evidence supports or contradicts it.
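As a rough illustration of a margin of error, the sketch below estimates a population mean from a sample and attaches a 95% confidence interval. The survey scores are hypothetical, the function name `mean_confidence_interval` is made up for this example, and the interval relies on a normal approximation. For a small sample like this one, a t-distribution would usually give a slightly wider and more appropriate interval.

```python
import math
import statistics

# Hypothetical sample: satisfaction scores (0-100) from 20 surveyed customers.
sample = [72, 85, 78, 90, 66, 81, 74, 88, 79, 83,
          70, 77, 92, 68, 84, 76, 80, 73, 87, 75]


def mean_confidence_interval(values, confidence=0.95):
    """Return (sample mean, margin of error) using a normal approximation."""
    n = len(values)
    sample_mean = statistics.mean(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    # Two-sided critical value, roughly 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return sample_mean, z * standard_error


sample_mean, margin = mean_confidence_interval(sample)
print(f"Estimated population mean: {sample_mean:.1f} +/- {margin:.1f}")
```

Asking for more confidence (say, 99%) widens the interval, which is the trade-off between certainty and precision that every inferential estimate carries.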

Predictive Analysis

Predictive analysis looks into current and historical data trends and patterns to predict future outcomes. From this definition alone, you can probably conclude that fields like marketing, sales, finance, and insurance rely heavily on predictive analysis to forecast trends, customer behavior, and financial outcomes.

However, connecting the past to the future is no easy task. That’s why predictive analysis employs a range of probabilistic data analysis techniques to make an educated guess about what’s likely to take place next. Data mining, predictive modeling, and simulation are just some of those techniques.
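One of the simplest predictive models is a straight-line trend fitted by least squares. The sketch below assumes hypothetical monthly sales figures and a made-up helper called `fit_line`. Real predictive work would typically use richer models and hold back some data to check the forecast.

```python
# Hypothetical monthly sales (illustrative values only).
months = [1, 2, 3, 4, 5, 6]
sales = [100.0, 110.0, 125.0, 130.0, 145.0, 150.0]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(months, sales)
forecast_month_7 = intercept + slope * 7  # extrapolate one month ahead

print(f"Trend: about {slope:.1f} more units per month")
print(f"Forecast for month 7: {forecast_month_7:.1f}")
```

The forecast is only an educated guess: it assumes the past trend continues, which is the core assumption behind most predictive techniques.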

Prescriptive Analysis

Data-driven decision-making is one of the primary reasons statistical analysis is used.

However, most types of statistical analysis only present data in a more digestible manner or help you draw actionable insights from it. They don’t actually tell you what to do next. But this doesn’t apply to prescriptive analysis.

This statistical method exists to suggest the best course of action based on the analysis of available data. In this sense, prescriptive analysis can be viewed as the final stage of data analytics after descriptive and predictive analysis.

Descriptive analysis explains the data, or what has already happened. Predictive analysis deals with what could happen. Prescriptive analysis then tells you which of the available options is the way to go.

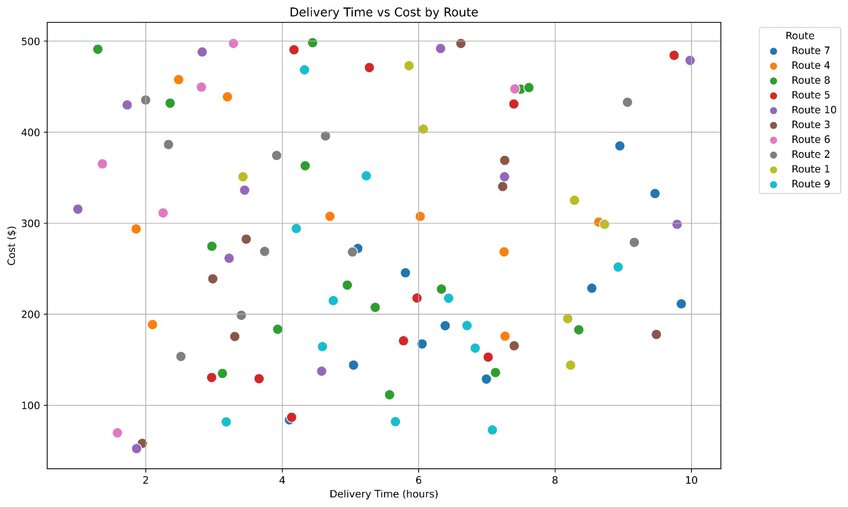

Prescriptive analysis example showing which routes are more efficient in terms of delivery time and cost. Created in seconds with Julius AI
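In its simplest form, prescriptive analysis scores each available option and recommends the best one. The sketch below is an assumption-laden toy: the three routes, their delivery times and costs, and the `cost_per_hour` weighting that converts time into money are all hypothetical. Real prescriptive systems often rely on optimization or simulation instead.

```python
# Hypothetical delivery routes (illustrative values only).
routes = {
    "Route A": {"hours": 5.0, "cost": 120.0},
    "Route B": {"hours": 4.0, "cost": 150.0},
    "Route C": {"hours": 6.0, "cost": 90.0},
}


def recommend_route(options, cost_per_hour=25.0):
    """Pick the route with the lowest combined cost.

    `cost_per_hour` is an assumed price placed on each hour of delivery time.
    """
    def total_cost(name):
        option = options[name]
        return option["cost"] + cost_per_hour * option["hours"]

    return min(options, key=total_cost)


print("Recommended when time is cheap:", recommend_route(routes))
print("Recommended when time is expensive:",
      recommend_route(routes, cost_per_hour=50.0))
```

Changing the weighting changes the recommendation, which shows that a prescriptive result is only as good as the objective you define for it.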

Exploratory Data Analysis

Exploratory data analysis is generally the first step of the data analysis process, taking place before any specific statistical method is applied. Essentially, its goal is to summarize the main characteristics of a data set before making any assumptions about it. Using this method, analysts can get to know the data set before analyzing it further, as well as uncover patterns, detect outliers, and find unknown (and interesting) associations within it.
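Outlier detection is a typical exploratory task. The sketch below uses the common interquartile range (IQR) rule, flagging values more than 1.5 IQRs outside the middle half of the data. The delivery times are hypothetical, and `find_outliers` is a made-up helper name. The 1.5 multiplier is a convention, not a law.

```python
import statistics

# Hypothetical delivery times in minutes (illustrative values only).
delivery_minutes = [12, 14, 13, 15, 14, 13, 12, 95, 14, 13]


def find_outliers(values, multiplier=1.5):
    """Flag values outside [Q1 - m * IQR, Q3 + m * IQR]."""
    q1, _median, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower = q1 - multiplier * iqr
    upper = q3 + multiplier * iqr
    return [v for v in values if v < lower or v > upper]


outliers = find_outliers(delivery_minutes)
print("Possible outliers:", outliers)
```

A flagged value is a prompt to investigate, not proof of an error. It may be a data-entry mistake or a genuinely unusual delivery.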

Causal Analysis

Causal analysis seeks to answer a single question: why do certain events or phenomena occur? Once you understand why something happened, it becomes easier to develop strategies to either replicate successful outcomes or prevent undesirable ones. That’s why causal analysis is widely used in the business world to optimize processes, improve performance, and drive innovation.
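One setting where a causal question can be answered fairly directly is a randomized experiment, such as an A/B test. The sketch below compares a hypothetical control group with a hypothetical treatment group using a permutation test. The data, the helper name `permutation_test`, and the assumption that customers were randomly assigned to groups are all illustrative. Without random assignment, a difference like this would show association, not cause.

```python
import random

# Hypothetical order values for customers who saw the old page (control)
# and the new page (treatment). Random assignment is assumed.
control = [20, 22, 19, 21, 23, 20, 22, 21]
treatment = [24, 25, 23, 26, 24, 27, 25, 26]


def mean(values):
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_permutations=5000, seed=0):
    """Return (observed difference in means, two-sided p-value)."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = mean(group_b) - mean(group_a)
    pooled = list(group_a) + list(group_b)
    size_b = len(group_b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = mean(pooled[:size_b]) - mean(pooled[size_b:])
        if abs(diff) >= abs(observed):
            extreme += 1
    return observed, extreme / n_permutations


effect, p_value = permutation_test(control, treatment)
print(f"Estimated effect of the new page: {effect:+.1f}")
print(f"Share of random relabelings at least as extreme: {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be a fluke of how customers were split, which is what lets you attribute it to the change itself.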

How To Conduct Statistical Analysis

Sure, there are several types of statistical analysis. However, they all generally follow the same step-by-step process.

Step #1 - Data Collection

There’s no data analysis without the data. So, Step 1 is collecting relevant data either firsthand (e.g., surveys, interviews, and observations) or through secondary sources (e.g., books, journal articles, and databases).

Step #2 - Data Organization

Step 2 consists of eliminating any obstacles to accurate data analysis. This involves identifying (and removing) duplicate data, inconsistencies, and other errors in the data set.
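As a rough sketch of this cleanup step, the code below normalizes inconsistent text, drops rows with missing values, and removes duplicates. The records, field names, and the `clean_records` helper are hypothetical. Dropping incomplete rows is only one possible policy. In practice you might fill in or flag missing values instead.

```python
# Hypothetical raw survey export (illustrative values only).
raw_records = [
    {"customer": "Alice ", "city": "Boston", "spend": "120.50"},
    {"customer": "alice", "city": "boston", "spend": "120.50"},  # duplicate
    {"customer": "Bob", "city": "Chicago", "spend": ""},         # missing spend
    {"customer": "Carol", "city": "Denver", "spend": "80"},
]


def clean_records(records):
    """Normalize text, drop incomplete rows, and remove duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        name = record["customer"].strip().title()
        city = record["city"].strip().title()
        spend_text = record["spend"].strip()
        if not spend_text:
            continue  # assumed policy: skip rows with no spend value
        row = (name, city, float(spend_text))
        if row in seen:
            continue  # same customer, city, and spend already kept
        seen.add(row)
        cleaned.append({"customer": name, "city": city, "spend": row[2]})
    return cleaned


cleaned = clean_records(raw_records)
for row in cleaned:
    print(row)
```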

Step #3 - Data Presentation

In Step 3, you arrange the data (for example, in tables or charts) for easy analysis. Some statistical methods, such as descriptive statistics, end at this step.
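A frequency table is one of the simplest ways to present data. The sketch below builds one from hypothetical survey responses. The labels and the `frequency_table` helper are made up for illustration.

```python
from collections import Counter

# Hypothetical survey responses (illustrative values only).
responses = [
    "Satisfied", "Neutral", "Satisfied", "Dissatisfied",
    "Satisfied", "Neutral", "Satisfied",
]


def frequency_table(values):
    """Return (label, count, share of total) rows, most frequent first."""
    counts = Counter(values)
    total = len(values)
    return [(label, count, count / total)
            for label, count in counts.most_common()]


table = frequency_table(responses)
print(f"{'Response':<14}{'Count':>6}{'Share':>8}")
for label, count, share in table:
    print(f"{label:<14}{count:>6}{share:>8.0%}")
```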

Step #4 - Data Analysis

Step 4 is where the data starts to make sense. You apply statistical techniques to analyze the data and identify the relevant patterns, trends, and associations.
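A correlation coefficient is one common way to quantify an association between two variables. The sketch below computes Pearson's r by hand for hypothetical advertising and sales figures. The variable names and the `pearson_r` helper are illustrative, and a strong correlation on its own says nothing about cause.

```python
import math

# Hypothetical weekly ad spend and units sold (illustrative values only).
ad_spend = [1, 2, 3, 4, 5]
units_sold = [2, 4, 5, 4, 5]


def pearson_r(xs, ys):
    """Pearson correlation coefficient, between -1 and 1."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


r = pearson_r(ad_spend, units_sold)
print(f"Correlation between ad spend and units sold: {r:.2f}")
```

Values near 1 or -1 indicate a strong linear relationship, while values near 0 indicate little or no linear relationship.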

Step #5 - Data Interpretation

After analyzing the data, all that’s left to do is explain the meaning of your findings, considering the context of the research question or problem you’re investigating. For non-specialist audiences, these findings should be presented in easy-to-understand formats like charts and reports.

Let AI Handle Your Statistical Analysis

When broken into steps like these, the statistical analysis process might seem simple. But in reality, getting from data collection to data interpretation requires a whole host of data analysis skills. The good news is that even individuals who lack these skills can end up with the same results. How? By employing statistical analysis software tools like Julius AI to do the analysis for them.

Learn More about How Julius AI Can Revolutionize Your Analysis Process

Julius AI is the ChatGPT of math and data science. In other words, all you need to do is input your data set, enter a few relevant prompts, and let this statistical software do its magic. Sign up for Julius AI today and witness the revolution of the data analysis process firsthand.

Frequently Asked Questions (FAQs)

What is statistical analysis in research?

Statistical analysis in research is the process of collecting, organizing, and interpreting data to uncover patterns, trends, and relationships. It helps researchers draw meaningful conclusions, test hypotheses, and make evidence-based decisions, turning raw data into actionable insights.

How do you choose a statistical analysis method?

Choosing a statistical analysis method depends on your research goals, the type of data you have, and the questions you want to answer. Consider whether you’re looking to describe data, make predictions, identify patterns, or determine cause-and-effect relationships, as each method serves a specific purpose.

Why is statistics important in everyday life?

Statistics is essential in everyday life because it helps us make informed decisions, from managing personal finances and understanding health risks to evaluating news and product reviews. By turning data into something we can interpret, statistics brings clarity to complex information, making it easier to navigate daily challenges.