July 18th, 2024

5 Statistical Analysis Methods for Better Research

By Rahul Sonwalkar · 9 min read

Statistical analysis has a single goal – to make sense of raw data. This goal can be achieved in various ways, depending on the type of data and the specific questions being asked.

Let’s say you just want to identify the main characteristics of a data set. In that case, you might use exploratory data analysis. By contrast, you’ll reach for inferential statistics when you need to draw conclusions about a larger population based on the data you analyzed.

But the job is not done once you select the appropriate type of statistical analysis for your data set. The next step is choosing the right statistical method to pull that analysis off.

So, what are your choices? This guide covers five common statistical analysis methods that might come in handy during your data analysis (and research) endeavors.

What Is a Statistical Analysis Method?

Simply put, a statistical method is the specific procedure used to analyze data and draw conclusions from it.

Let’s take descriptive statistics as an example. The goal of this type of statistical analysis is to – as the name suggests – describe a data set by providing summaries of the sample data. To achieve this goal, descriptive analysis employs specific statistical methods, such as the mean and median, to summarize the data.

Similarly, inferential statistics relies on statistical methods like hypothesis testing and regression analysis to generalize results from sample data to a larger population.

What Are the 5 Statistical Analysis Methods for Research?

The following five statistical analysis methods are commonly used in research. Master them, and you’ll be well-equipped to tackle many everyday data analysis tasks.

Mean

The mean, often referred to as the average, is a fundamental statistical technique. The mean of a data set describes the central tendency of that data set. It’s calculated by adding up all the values in the data set and then dividing the sum by the total number of values. As simple as that!
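Here’s a minimal Python sketch of that calculation, using a handful of hypothetical exam scores. In practice, the standard library’s `statistics.mean` does the same job; the hand-written version just makes the sum-then-divide step explicit.

```python
def arithmetic_mean(values):
    """Return the sum of the values divided by how many values there are."""
    if not values:
        # The mean of an empty data set is undefined (division by zero).
        raise ValueError("mean of an empty data set is undefined")
    return sum(values) / len(values)


# Hypothetical exam scores for five students.
exam_scores = [72, 85, 90, 66, 87]
print(arithmetic_mean(exam_scores))  # 80.0
```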

Quick and straightforward as this statistical analysis method is, it’s also highly useful. It gives researchers a single representative value that summarizes the data set, which allows for easy comparison between different data samples. That makes the mean a valuable tool in fields like economics, education, and psychology.

Standard Deviation

Not all data points will fall exactly at the mean. That’s why a statistical method called standard deviation exists: to quantify how spread out those points are. If the data points are widely dispersed around the mean, you’re dealing with a high standard deviation. In contrast, a low standard deviation indicates that most of the data points are close to the mean.
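The sketch below computes standard deviation as the square root of the average squared distance from the mean. The article doesn’t say which variant it means, so the code assumes the common convention: the sample version (dividing by n − 1) by default, with the population version (dividing by n) as an option. The two data sets are hypothetical and share the same mean but differ in spread.

```python
import math


def standard_deviation(values, sample=True):
    """Square root of the mean squared deviation from the mean.

    sample=True divides by n - 1 (Bessel's correction, for a sample);
    sample=False divides by n (for a complete population).
    """
    n = len(values)
    if n == 0 or (sample and n < 2):
        raise ValueError("not enough values")
    m = sum(values) / n
    squared_deviations = sum((x - m) ** 2 for x in values)
    divisor = n - 1 if sample else n
    return math.sqrt(squared_deviations / divisor)


# Two hypothetical data sets, both with a mean of 50.
tight = [49, 50, 50, 51]
spread = [20, 40, 60, 80]
print(round(standard_deviation(tight), 2))   # 0.82 (low: values hug the mean)
print(round(standard_deviation(spread), 2))  # 25.82 (high: values are dispersed)
```

The results should match `statistics.stdev` (sample) and `statistics.pstdev` (population) from the Python standard library.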

But why does the level of deviation matter? Because it tells you how variable or consistent a data set is. A low standard deviation means the mean represents individual values well, so predictions and decisions based on it are more precise. A high standard deviation signals that individual values vary widely, so the mean alone can be misleading.

Regression

Regression analysis deals with the relationship between a dependent variable and one or more independent variables. The goal of this statistical method is to predict the value of the dependent variable based on the values of the independent variables.

Take advertising as an example. Let’s say a company’s advertising expenditure is the independent variable, while its monthly sales are the dependent variable. By analyzing data on past advertising expenditures and corresponding monthly sales, an analyst can create a model that describes the relationship between these two variables. This model can then be used to predict future sales based on planned advertising spending.
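To make the advertising example concrete, here’s a minimal ordinary least squares sketch in Python. The spending and sales figures are hypothetical, and fitting a straight line is an assumption about the relationship; the code finds the slope and intercept that minimize the squared prediction errors and then forecasts sales for a planned budget.

```python
def fit_simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    # Sum of cross-deviations and sum of squared x-deviations.
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    slope = sxy / sxx
    # The fitted line always passes through the point (x_mean, y_mean).
    intercept = y_mean - slope * x_mean
    return slope, intercept


# Hypothetical monthly figures, both in thousands of dollars.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
monthly_sales = [3.1, 4.9, 7.2, 8.8, 11.0]

slope, intercept = fit_simple_linear_regression(ad_spend, monthly_sales)
planned_spend = 6.0
forecast = intercept + slope * planned_spend
print(f"sales = {intercept:.2f} + {slope:.2f} * ad_spend")
print(f"forecast for planned spend of {planned_spend}: {forecast:.2f}")
```

On Python 3.10 and later, `statistics.linear_regression(ad_spend, monthly_sales)` should return the same slope and intercept.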

The regression analysis in this example is called simple (single-variable) linear regression, as it involves only one independent variable and one dependent variable. There’s also multiple regression analysis, which models the dependent variable using several independent variables at once.

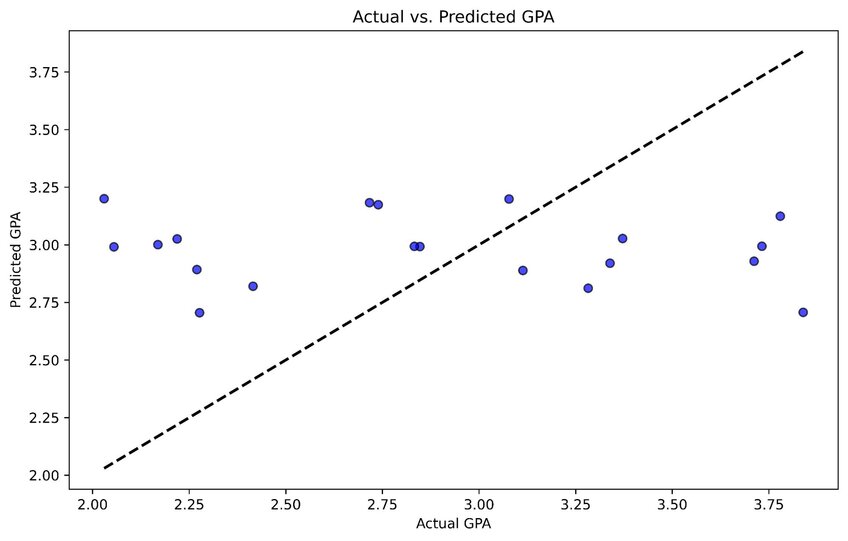

Example regression analysis of the relationship between actual and predicted GPA based on three predictor variables: hours studied, attendance rate, and participation in extracurricular activities. Created in seconds with Julius AI

Hypothesis Testing

In statistical analysis, researchers often arrive at a claim about the analyzed data, such as “students who study more get higher grades.” But this isn’t where the analysis ends. They also have to check whether the data actually support that claim or whether the pattern could plausibly be due to chance. That’s where hypothesis testing comes into play.

There are two types of hypotheses you need for hypothesis testing:

1. The null hypothesis, i.e., the default assumption, typically that there is no effect or no difference in the data.

2. The alternative hypothesis, i.e., the statement that contradicts the null hypothesis, typically that an effect or difference does exist.

After hypothesis testing, you’ll either reject the null hypothesis in favor of the alternative or fail to reject it; a test can’t prove the null hypothesis true. Keep in mind that you should only reject the null hypothesis if the result is statistically significant, which means a result at least this extreme would be unlikely (by convention, less than 5% likely) if the null hypothesis were true.
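The article doesn’t name a specific test, so the sketch below uses one simple, assumption-light option: an exact two-sided permutation test for a difference in group means. The null hypothesis is that the group labels don’t matter; the p-value is the share of all possible relabelings that produce a difference at least as extreme as the one observed. The scores and the 0.05 threshold are hypothetical choices for illustration.

```python
from itertools import combinations
from statistics import mean


def permutation_test(group_a, group_b):
    """Exact two-sided permutation test for a difference in group means.

    Null hypothesis: both groups come from the same distribution, so the
    labels are interchangeable. Returns (mean(B) - mean(A), p_value).
    """
    observed = mean(group_b) - mean(group_a)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    total = 0
    # Enumerate every way to relabel n_a of the pooled values as "group A".
    for chosen in combinations(range(len(pooled)), n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [v for i, v in enumerate(pooled) if i not in chosen_set]
        diff = mean(b) - mean(a)
        # Count relabelings at least as extreme as the observed result
        # (the small tolerance absorbs floating-point rounding).
        if abs(diff) >= abs(observed) - 1e-9:
            extreme += 1
        total += 1
    return observed, extreme / total


# Hypothetical test scores under two teaching methods.
method_a = [12, 14, 15, 16, 18]
method_b = [20, 21, 22, 23, 25]

observed, p_value = permutation_test(method_a, method_b)
alpha = 0.05  # significance threshold, chosen before running the test
print(f"difference in means = {observed:.1f}, p = {p_value:.4f}")  # 7.2, 0.0079
if p_value < alpha:
    print("Reject the null hypothesis")
else:
    print("Fail to reject the null hypothesis")
```

Exhaustive enumeration keeps the result deterministic, but the number of relabelings grows quickly; for larger samples, a random subset of relabelings or a parametric test such as a t-test is the usual choice.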

Sample Size Determination

Researchers often can’t measure an entire population. Instead, many statistical analysis methods are applied to a subset of it, known as the sample.

But how do you know how large a sample to choose for your analysis? By using another statistical method, of course! As its name implies, sample size determination calculates the sample size needed for reliable and accurate analysis, based on factors such as the desired confidence level and margin of error.
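As one illustration, here’s a sketch of the textbook formula for estimating a proportion, n = z² · p(1 − p) / E², where z is the critical value for the chosen confidence level and E is the margin of error. It assumes a simple random sample, a large population (no finite-population correction), and the normal approximation; the default p = 0.5 is the most conservative guess when you have no prior estimate.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95,
                               expected_proportion=0.5):
    """Minimum sample size to estimate a proportion within +/- margin_of_error."""
    if not 0 < margin_of_error < 1:
        raise ValueError("margin_of_error must be between 0 and 1")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = expected_proportion
    n = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    # Round up: rounding down would miss the target margin of error.
    return math.ceil(n)


print(sample_size_for_proportion(0.05))  # 385
print(sample_size_for_proportion(0.03))  # 1068
```

Tightening the margin from 5% to 3% nearly triples the required sample, which shows why it pays to decide on precision before collecting data. Other designs (comparing means, detecting an effect with a given power) use different formulas.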

Go too small, and your results won’t be reliable. Go too big, and you’ll spend extra time and resources for little additional precision. Use sample size determination beforehand, and you’ll get a sample size that fits your research.

Tips to Improve Your Statistical Analysis Research

Knowing about the different statistical analysis methods you can use is just the beginning. There are a few additional tips to consider to make your research go off without a hitch:

- Improve your data collection process by gathering only the data relevant to your research.

- Speed up the data analysis by meticulously organizing the data beforehand.

- Regularly clean your data (fix errors, remove duplicates, and handle missing values) to keep it accurate and up to date.

- Use AI-powered statistical analysis software to glide through the statistical analysis process.

Let Julius AI Handle Your Analysis in Just Minutes

There are statistical analysis software tools that can help you streamline the statistical analysis process by automating repetitive tasks, organizing data efficiently, and generating visualizations. However, there are also tools that can do the entire statistical analysis for you. Julius AI is one of those tools.

If you need to speed up your research, you’ll be happy to know that Julius AI can handle your entire analysis in a matter of minutes. Best of all? You only need to supply the data and instruct this handy tool on what to do with it. Try out Julius AI today to see firsthand how easy it is to use.

Frequently Asked Questions (FAQs)

What are the different types of statistical methods?

Statistical methods can be broadly categorized into descriptive statistics, which summarize data (e.g., mean, median, standard deviation), and inferential statistics, which draw conclusions from data using techniques like hypothesis testing and regression analysis. Other methods include exploratory data analysis, which identifies patterns, and sample size determination, which ensures reliable results from subsets of data.

Why is statistical analysis important in research?

Statistical analysis is vital in research because it transforms raw data into meaningful insights, helping researchers identify trends, test hypotheses, and draw informed conclusions. It helps ensure that findings are objective, accurate, and supported by evidence, thereby enhancing the credibility and relevance of the study.

Why is it important to use the correct statistical process?

Using the correct statistical process is crucial to obtaining reliable and accurate results. Incorrect methods can lead to flawed conclusions, wasted resources, or misleading insights, which undermine the integrity of the research. Choosing the right process ensures that the analysis aligns with the data type and research objectives.